By Muslim Muhammad Yusuf

Why spend hours searching through a dictionary for the right words to impress a hiring manager, when you can “cut corners”?

“These days, most companies use Applicant Tracking Systems to screen CVs before a human even sees them, and if your CV doesn’t have the right keywords, it may never make it to the next stage,” said Aishat Bashir Tukur, a cybersecurity expert and coach.

Rukayyat (not her real name), who is actively looking for a job, has faced a similar challenge. She first struggled during her National Youth Service Corps (NYSC) programme, applying with a basic CV that got little traction. After several failed attempts, she began using tools like ChatGPT to improve her CV and cover letter, always editing the Artificial Intelligence (AI) outputs to make them sound more human, and she started seeing better responses from potential employers. “I learned to personalise it. You have to sound real, not like a machine.”

From scanning resumes to shortlisting candidates, AI is now used throughout the hiring process. Some recruiters suggest keywords for job descriptions, and candidates then adjust their resumes to match the exact language used, sometimes even when they lack the required skills.

However, many candidates with relevant, proven skills are being screened out by automated systems and chatbots that prioritise specific keywords.

It was also observed that recruiters often trust AI tools blindly, with minimal human intervention in the early stages of hiring, even though AI-powered Applicant Tracking Systems (ATS) may unintentionally favour certain applicants. At the same time, some hiring managers are overwhelmed by AI-suggested candidates who don’t truly fit the role, creating further challenges in workplace hiring.

What job seekers are saying

Many job seekers in Nigeria and globally have shared how they spent months applying without success, but once they optimised their CVs or LinkedIn profiles, often with the help of tools like ChatGPT, they began getting interview invites and even job offers within weeks.

For Tunde, the job hunt was frustrating. Despite having the right skills and experience, he was barely getting responses after sending out dozens of applications. Everything changed when he discovered that companies were using software designed to scan CVs and select candidates based on matching keywords.

“Yes, I’ve used AI to design my CV, not once, not twice,” Tunde said. “Once I knew that, I updated my CV and cover letter to match the job descriptions. I wasn’t lying in it, just using the right words to describe my real skills. That made a big difference,” he added.

Like many others in Nigeria’s job market, Charles, an intern at a Nigerian bank, struggled for months with no responses to his applications. His breakthrough came when he discovered that most companies now use AI-powered Applicant Tracking Systems.

He said, “I used AI to redesign my CV. Since then, I’ve had more callbacks and interviews. It really worked.”

Understanding the algorithm

According to ChatGPT, tools described as “Applicant Tracking System Breakers” compare candidate resumes against company requirements, extracting technical and soft skills from various document formats. They also offer feedback for improving a resume, including grammar corrections, to help candidates tailor their applications effectively.

Ibrahim Zubairu is a technical product manager and founder of Malamiromba, a virtual tech community in Northern Nigeria. Zubairu, who works with UK-based AI companies and fintechs, explained the technical workings of AI-powered hiring systems, particularly how they are built.

He explained that developers like himself do not simply view these systems as digital filters. Instead, they see them as comprehensive tools for talent acquisition, a broader concept that includes long-term planning, brand alignment, and candidate nurturing.

According to him, ATS platforms operate using several layers of logic: parsing resumes into structured data such as name, skills and job history; matching keywords to job roles; and filtering out candidates who don’t meet specific criteria. This entire process, he said, relies heavily on natural language processing (NLP) libraries like spaCy and NLTK, and machine learning (ML) frameworks like TensorFlow.
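To make the three layers Zubairu describes more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Taleo, Jobscan or any real ATS works: it assumes a hypothetical plain-text CV with `Name:`, `Skills:` and `Job:` labels, and it uses simple string matching in place of the NLP libraries (spaCy, NLTK) and ML frameworks he mentions. The function names, sample CV and 0.6 cut-off are invented for this example.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ParsedResume:
    name: str
    skills: set
    job_history: list = field(default_factory=list)


def parse_resume(text: str) -> ParsedResume:
    """Layer 1 (parsing): pull labelled fields out of plain text.

    Assumes a hypothetical format with 'Name:', 'Skills:' and 'Job:' lines;
    real systems use NLP libraries rather than fixed labels.
    """
    name = re.search(r"^Name:\s*(.+)$", text, re.MULTILINE)
    skills = re.search(r"^Skills:\s*(.+)$", text, re.MULTILINE)
    jobs = re.findall(r"^Job:\s*(.+)$", text, re.MULTILINE)
    return ParsedResume(
        name=name.group(1).strip() if name else "",
        skills={s.strip().lower() for s in skills.group(1).split(",")} if skills else set(),
        job_history=[j.strip() for j in jobs],
    )


def keyword_score(resume: ParsedResume, required: set) -> float:
    """Layer 2 (matching): share of the role's keywords present in the CV's skills."""
    wanted = {k.lower() for k in required}
    if not wanted:
        return 0.0
    return len(resume.skills & wanted) / len(wanted)


def passes_filter(resume: ParsedResume, required: set, threshold: float = 0.6) -> bool:
    """Layer 3 (filtering): drop CVs whose score falls below a hypothetical cut-off."""
    return keyword_score(resume, required) >= threshold


cv = "Name: Amina Bello\nSkills: JavaScript, React, SQL\nJob: Front-end Intern\n"
role = {"JavaScript", "React", "TypeScript"}
parsed = parse_resume(cv)
print(parsed.name, round(keyword_score(parsed, role), 2), passes_filter(parsed, role))
```

Running it prints `Amina Bello 0.67 True`. The same scoring also hints at why keyword filters are easy to game: a CV whose skills line simply repeats “JavaScript, React, TypeScript” would score 1.0, whatever the applicant’s real experience.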

Understanding these layers exposes the logic behind the rankings, Zubairu explained, adding that “a simple keyword-based system can be easily gamed, while a machine learning model can inherit deep biases from its training data.”

To address fairness in these tools, he stressed the importance of infusing Diversity, Equity, and Inclusion (DEI) frameworks within AI systems. “Because, if we build a system using foreign datasets, it might favour elite schools or specific regions,” Zubairu declared.

By integrating DEI principles, he said, developers can ensure the system doesn’t unfairly screen out qualified candidates because of biased historical data. “Bias can creep in subtly. That’s why DEI matters, to adjust the model and make it more inclusive,” he added.

Zubairu advocated a blended approach that combines AI-powered filtering with human judgment.

Be authentic, recruiters tell applicants

A senior HR official at a luxury hospitality brand in Abuja confirmed that the organisation uses tools like Taleo, an Oracle-owned Applicant Tracking System (ATS), to support recruitment. The official said automation helps organise and flag potential candidates, but emphasised that shortlisting and hiring decisions remain human-led.

The company, which preferred to remain unnamed, said the system improves efficiency, especially when handling high volumes of applications, but that safeguards are in place to avoid bias, such as manual reviews of lower-ranked CVs, diverse hiring panels, and unconscious bias training for managers.

To make it easier for applicants, the company added: “We avoid overly rigid keyword filters, and focus on a balanced review process to ensure qualified candidates aren’t screened out unfairly.”

However, an official of another international public company based in Abuja explained that, despite its size and global reach, the company is yet to fully trust AI in its recruitment process.

“AI cannot be trusted, we can’t afford to exclude potentially great candidates simply because of a machine’s limitations,” an official with the company said.

The official, who asked to remain anonymous, said that although the company receives between 300 and 1,000 applications within a week whenever it is recruiting, it still prefers to keep the process manual to ensure every applicant is considered fairly. “We want to maintain a unified approach on AI, but we’re not there yet,” he said.

For job seekers, the officials advised: “Be authentic. We value attitude and potential just as much as qualifications.”

Zubairu said he tests such tools’ core function directly. Failures there, he said, provide concrete evidence that the AI is blind to qualified candidates whose CVs don’t fit a rigid, often Western-centric, resume style.

When asked whether applicants can beat these systems, the AI expert mentioned tools like LockedIn, which guide users in real time during interviews, even suggesting what to say next.

“These tools make it easier to pass AI filters, but they also expose some limitations. It’s a game of who’s smarter, the applicant or the recruiter,” he added.

How AI influences jobs on LinkedIn

It was found that most recruiters on LinkedIn use tools that work like search engines: they type in job titles, skills, tools or qualifications to find the most relevant candidates. For instance, a recruiter looking for a “Front-end Developer” with “JavaScript” may never find a profile that simply says “Website Designer” without the right tech keywords.
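LinkedIn does not publish how its recruiter search ranks profiles, so the sketch below is only an illustration under a simplifying assumption: a hypothetical `search_profiles` function that returns the profiles containing every search term as plain text. The sample profiles are invented. It shows why the “Website Designer” profile is missed until the relevant tech keywords are added.

```python
def search_profiles(profiles: dict, terms: list) -> list:
    """Hypothetical keyword search: keep profiles containing every term (case-insensitive)."""
    wanted = [t.lower() for t in terms]
    return [name for name, text in profiles.items()
            if all(t in text.lower() for t in wanted)]


profiles = {
    "Profile A": "Front-end Developer | JavaScript, React, CSS",
    "Profile B": "Website Designer | HTML, CSS, responsive sites",
}
query = ["Front-end Developer", "JavaScript"]

print(search_profiles(profiles, query))  # ['Profile A']

# The same person, after adding the tech keywords they genuinely use.
updated = {**profiles, "Profile B": "Website Designer, Front-end Developer | JavaScript, HTML, CSS"}
print(search_profiles(updated, query))  # ['Profile A', 'Profile B']
```

Real recruiter tools likely handle synonyms and ranking more cleverly, but exact wording still matters whenever matching is literal.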

Zubairu mentioned Teamtailor, a tool mostly used by LinkedIn recruiters, which helps recruitment companies streamline hiring by automating job postings and tracking applications based on skills, experience and interests. As a result, users spend less time applying for mismatched jobs, and recruiters trust profiles more.

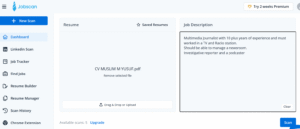

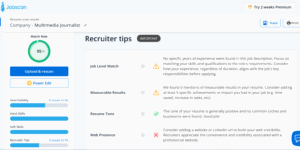

This reporter emailed companies behind AI-powered hiring tools, including Jobscan, Harver, LinkedIn and Resume Worded, to understand how their systems work. Of all the platforms approached, only Jobscan responded, and it did not clarify many of the questions sent about the transparency of AI-powered systems. Instead, it gave a general explanation of how Applicant Tracking Systems work.

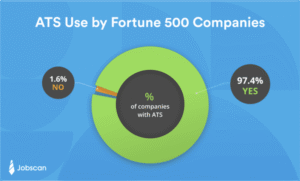

In its response, Jobscan emphasised that most people applying for jobs online are likely doing so through an ATS. The company noted that in 2023, it detected ATS use in over 97 percent of Fortune 500 companies.

Jobscan, in its mail, explained that resumes that match closely are ranked higher, making them more visible to hiring managers. “Those that don’t include the right language or formatting may be deprioritized, regardless of the applicant’s real-world competence,” the company added.
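Jobscan did not say how its own scoring works. As a hedged illustration of the ranking it describes, the sketch below assumes the simplest possible rule, a hypothetical `match_rate` equal to the share of job-ad keywords found word-for-word in a CV, and sorts CVs by it. The sample CVs and keywords are invented.

```python
def match_rate(cv_text: str, keywords: list) -> float:
    """Share of job-ad keywords that appear verbatim (case-insensitive) in the CV."""
    if not keywords:
        return 0.0
    text = cv_text.lower()
    found = [k for k in keywords if k.lower() in text]
    return len(found) / len(keywords)


def rank_cvs(cvs: dict, keywords: list) -> list:
    """Order applicant names from highest to lowest match rate (most visible first)."""
    return sorted(cvs, key=lambda name: match_rate(cvs[name], keywords), reverse=True)


keywords = ["customer service", "Excel", "inventory"]
cvs = {
    "Applicant 1": "Handled customer service and inventory; advanced Excel.",
    "Applicant 2": "Looked after guests and kept stock records in spreadsheets for five years.",
    "Applicant 3": "Customer service lead.",
}

for name in rank_cvs(cvs, keywords):
    print(name, round(match_rate(cvs[name], keywords), 2))
```

Applicant 2 plausibly has relevant experience but ranks last with a score of 0.0 because the wording differs from the job ad, which mirrors Jobscan’s point that such CVs may be deprioritised regardless of real-world competence.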

Why good CVs get ignored in the AI age

Aishat Bashir Tukur said many job seekers in Nigeria are struggling to get noticed, not because they lack skills, but because their CVs can’t get past the software employers use.

The tools used for CV screening today combine AI, ML, NLP and advanced search techniques to automate resume extraction, evaluation and matching. Some argue that these technologies reduce human bias and improve the quality of shortlisting.

This reporter ran a CV, which was not designed with AI and included some matching keywords in its summary, through Jobscan. The tool rated it a 95% match, and the exercise showed how easily such a score could signal to a recruiter whether to pick or ignore a CV.

Even though AI can help speed up hiring, Aishat believes it doesn’t always fairly capture the full value of what a person brings. She also warned applicants against vague phrases like “team player” or “works under pressure”, recommending instead that they be clear and give real examples.

She advised employers to be more realistic, especially with entry-level jobs, so that applicants are not pushed to use AI to generate qualifications they may not have. “You can see jobs asking fresh graduates to have years of experience or special certificates. That’s not fair,” she said.

Results from the CV scan indicate that even when an applicant submits a manually crafted CV, it’s crucial to align the content with relevant keywords and skills listed in the job advertisement.

To counter some of these challenges, Zubairu said he co-built a platform called Workdey, designed as a local alternative to platforms like Fiverr and Upwork. The goal, he said, is to help Nigerian freelancers overcome the challenges of cross-border work by solving both the gig-job connection and the financial remittance problems many African freelancers face.

Nigeria’s legal framework and AI regulation

Currently, Nigeria’s employment laws do not specifically address AI-related issues. However, Aisha Morohunfola, a Legal Compliance & Startup Advisor, said certain provisions can be leveraged to mitigate AI’s adverse effects on workers.

In a LinkedIn post analysing the role of AI in hiring, she cited the Nigerian Labour Act, which provides a general framework for employment relations, including protections against unfair termination. According to her, it could be expanded to include provisions on AI-driven job displacement.

While primarily focused on data privacy, the Nigerian Data Protection Regulation (NDPR), she said, can also be applied to AI systems that process employee data. “Employers using AI for recruitment or performance monitoring must comply with NDPR’s data protection principles, such as obtaining consent and ensuring data accuracy,” she added.

To address the challenges AI poses in the workplace, Morohunfola recommended that Nigeria develop AI-specific employment regulations, introducing laws that directly address AI’s impact on employment with a focus on transparency, accountability and fairness.

Transparency alone won’t make AI accountable

As artificial intelligence continues to shape public life, three influential reports analysed by this reporter have raised critical concerns about the transparency and accountability of AI systems.

Mozilla’s 2020 white paper “Creating Trustworthy AI” criticised tech companies for hiding how their systems work behind complex models and legalese, leaving users powerless to understand or contest decisions.

In their 2021 paper, “Towards Accountability in the Use of AI for Public Administrations”, researchers Michele Loi and Matthias Spielkamp of AlgorithmWatch argue that human accountability is weakened when decisions are delegated to automated systems.

Additionally, a 2024 study by Ramak Molavi Vasse’i and Gabriel Udoh focuses on AI-generated content and the methods used to disclose its synthetic nature. The report “In Transparency We Trust?” warns that over-reliance on transparency alone may lead to a false sense of control.

The researchers highlight the risks of distributed responsibility: when many actors are involved, it becomes difficult to trace who is responsible for harmful outcomes. The AlgorithmWatch report stresses that transparency alone isn’t enough, drawing a distinction between public transparency, which lets people scrutinise AI systems, and auditor transparency, which enables independent oversight.

Note: Some names used in this story are pseudonyms to protect the identities of employees and employers.

This report was produced with support from the Centre for Journalism Innovation and Development (CJID) and Luminate.

Source: Daily Trust